With sensor costs ranging from $15 to $1,000, car manufacturers want to know how many sensors a vehicle needs for fully autonomous driving.

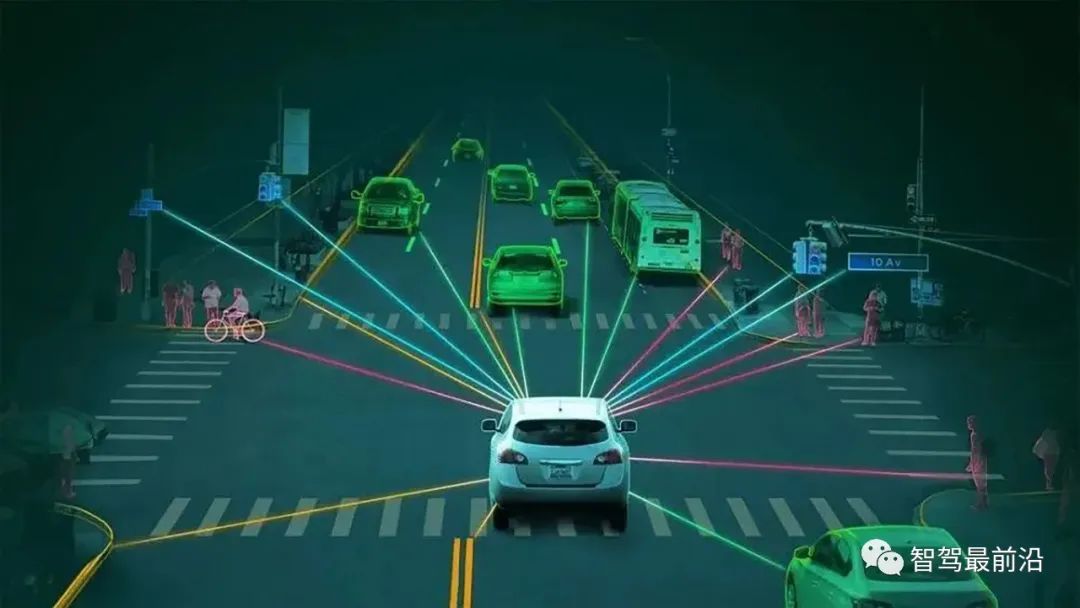

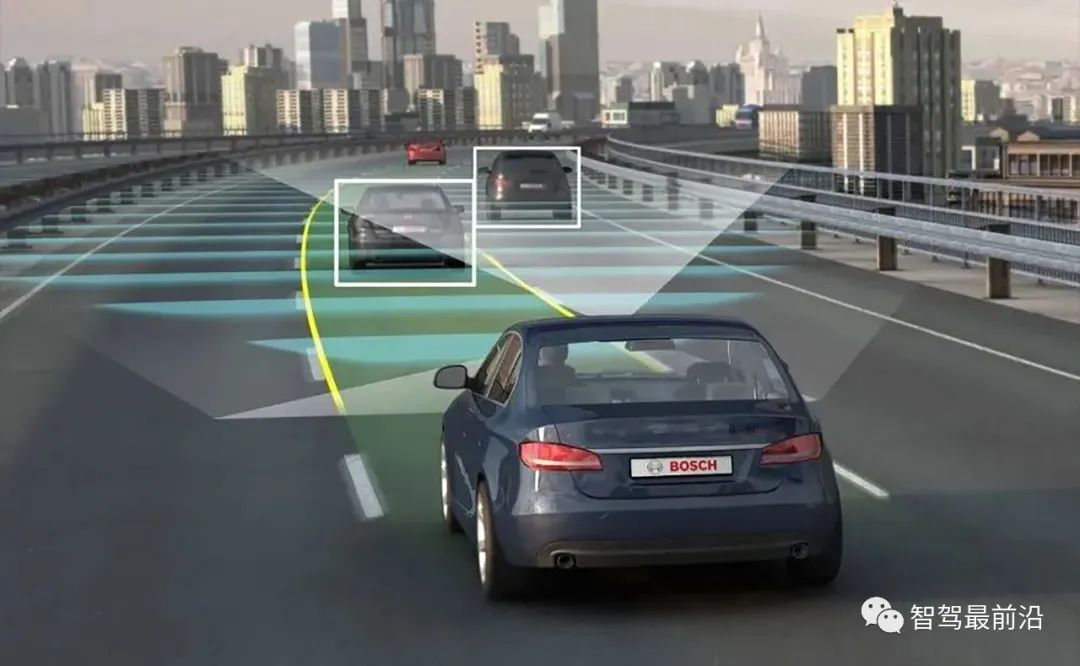

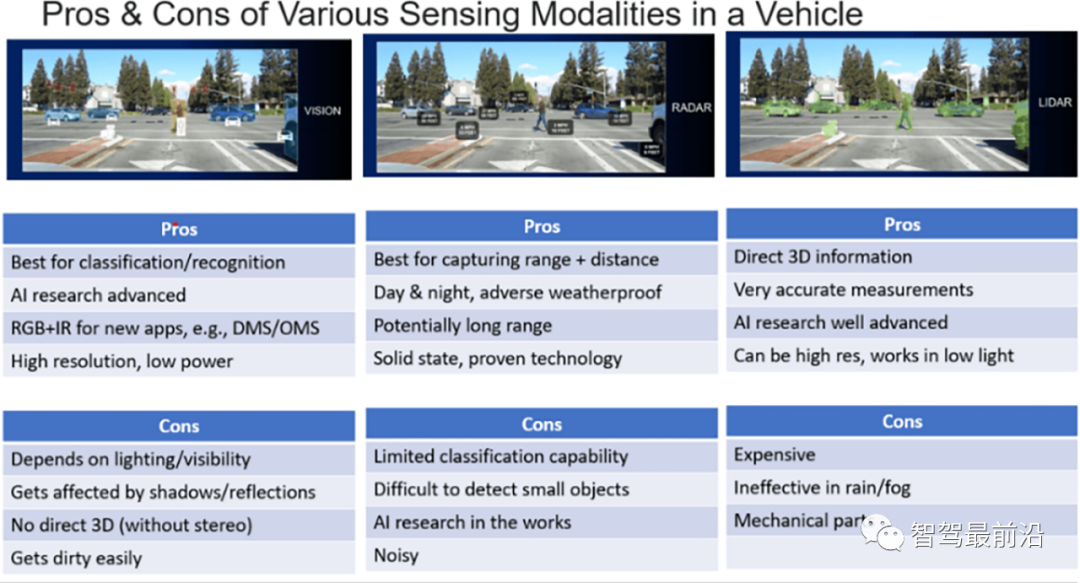

These sensors collect data about the surrounding environment and include cameras, LiDAR, radar, ultrasonic sensors, and thermal sensors. One type of sensor is not enough, because each has its own limitations. This is the key driving force behind sensor fusion, which combines multiple types of sensors to achieve safe autonomous driving.

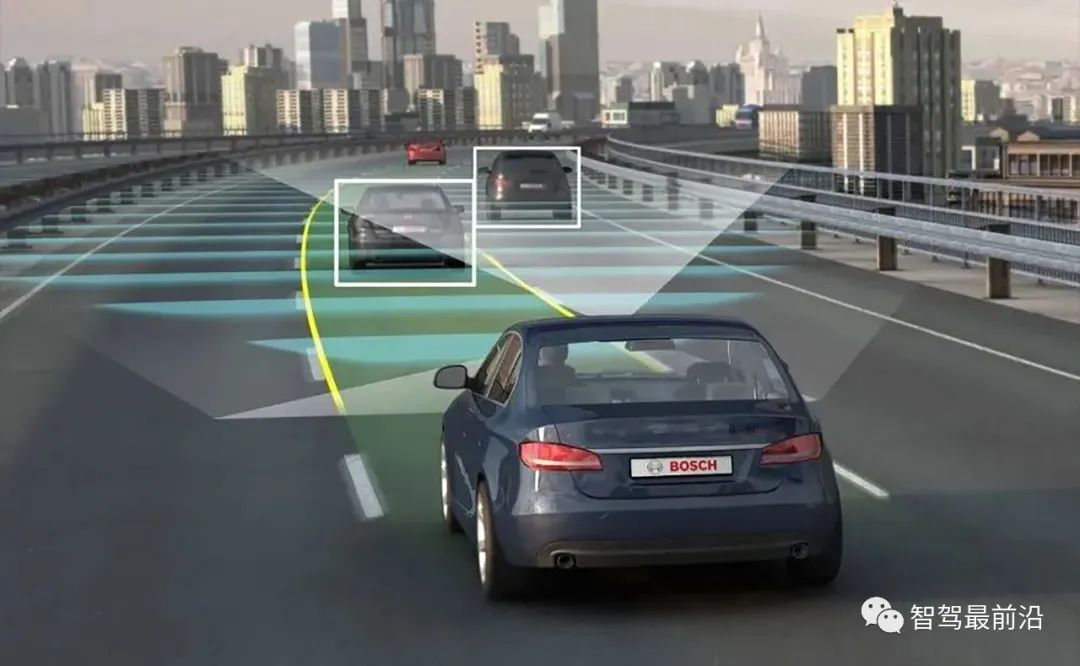

All vehicles at Level 2 (L2) or higher rely on sensors to "see" the surrounding environment and perform tasks such as lane centering, adaptive cruise control, emergency braking, and blind-spot warning. So far, original equipment manufacturers (OEMs) have adopted very different design and deployment approaches.

In May 2022, Mercedes-Benz launched its first vehicle capable of L3 autonomous driving in Germany. L3 autonomous driving is offered as an option on the S-Class and EQS, and is planned to launch in the United States in 2024.

According to the company, DRIVE PILOT builds on the driver assistance package (radar and cameras) and adds new sensors, including LiDAR, advanced stereo cameras in the front window, and multifunction cameras in the rear window. The company has also added microphones (especially for detecting emergency vehicles) and humidity sensors in the front cab. In total, 30 sensors are installed to capture the data required for safe autonomous driving.

Tesla is taking a different path. In 2021, Tesla announced that the Model 3 and Model Y would adopt its camera-based Tesla Vision strategy for autonomous driving, with the Model S and Model X following in 2022. The company has also decided to remove its ultrasonic sensors.

Sensor limitations

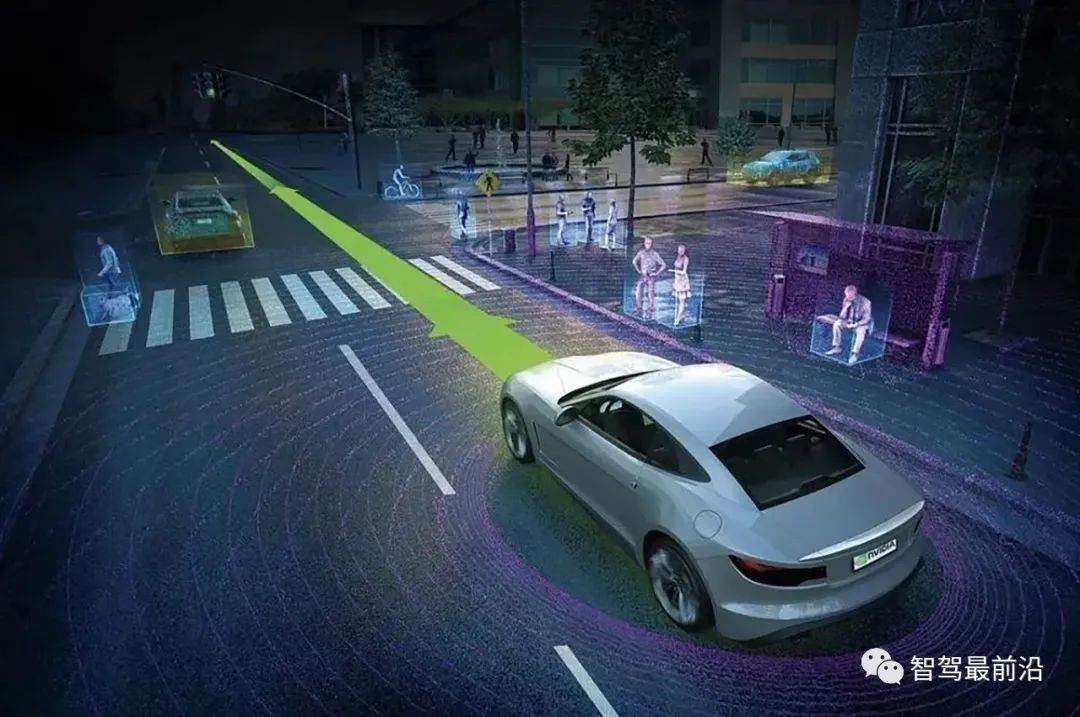

One of the challenges facing autonomous driving design today is the limitations of individual sensors. To overcome them, sensor fusion may be necessary, combining multiple sensors so that autonomous driving achieves the best performance and safety. The key issue is not only the number, type, and placement of sensors, but also how AI/ML technology should interact with those sensors to analyze data for optimal driving decisions.

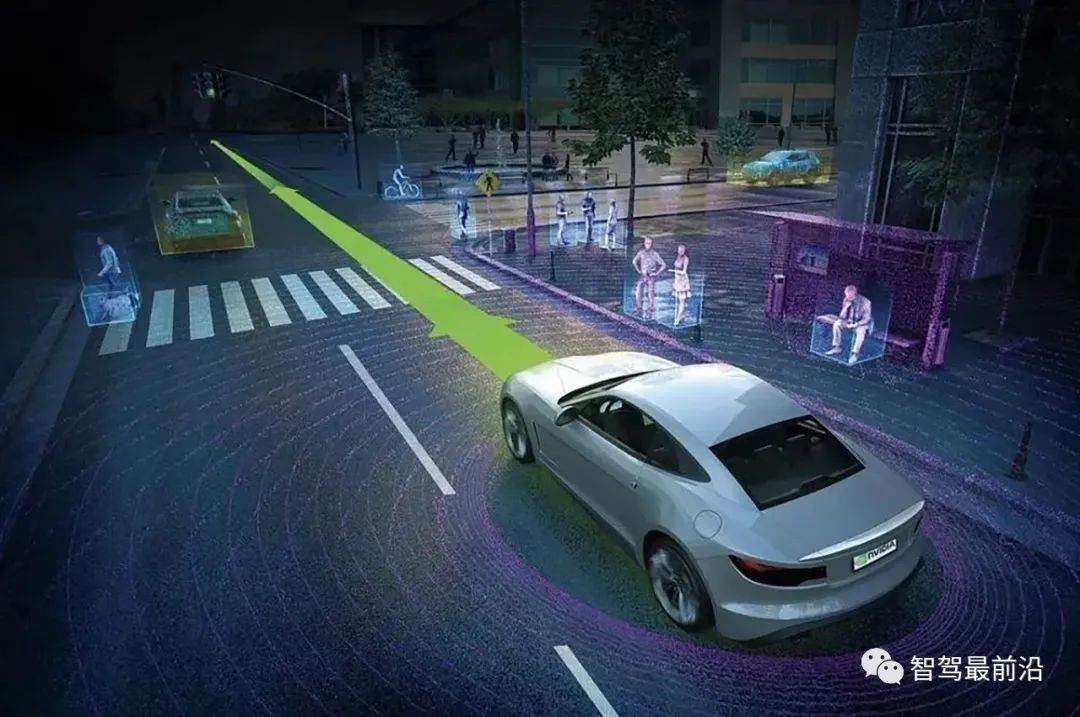

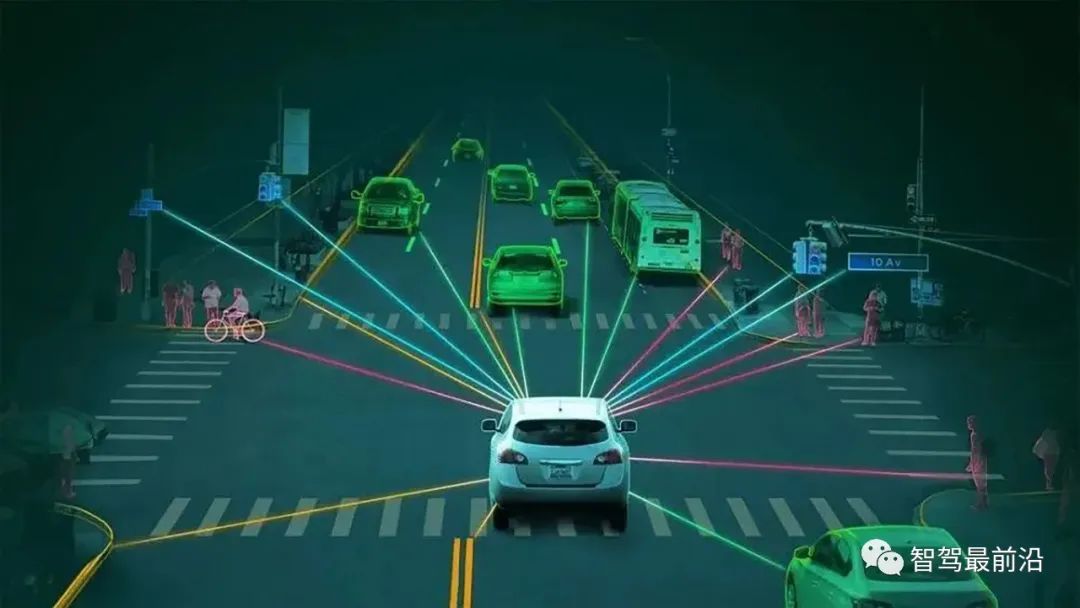

"Autonomous driving extensively utilizes artificial intelligence technology," said Thierry Koulton, Rambus Security IP Technology Product Manager. "Autonomous driving, even entry-level ADAS functions, require vehicles to exhibit a level of environmental awareness comparable to or better than human drivers. Firstly, vehicles must recognize other vehicles, pedestrians, and roadside infrastructure, and determine their correct positions. This requires AI deep learning technology to effectively address pattern recognition capabilities. Visual pattern recognition is an advanced deep learning field widely used by vehicles. In addition, vehicles must always be able to calculate their optimal trajectory and speed. This requires artificial intelligence to also effectively address route planning capabilities. In this way, LiDAR and radar can provide distance information, which is crucial for correctly reconstructing the vehicle environment."

Sensor fusion, which combines information from different sensors to better understand the vehicle environment, is still an active research field.
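One of the simplest ways to picture fusion is combining two distance readings for the same object, weighting each by how much it can be trusted. The sketch below uses inverse-variance weighting, a textbook technique chosen here for illustration; it is not the method of any OEM or vendor quoted in this article. The class name, function name, and all noise figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    """A distance measurement to one object, with its uncertainty."""
    sensor: str
    distance_m: float  # measured distance in meters
    std_m: float       # assumed 1-sigma measurement noise in meters


def fuse_ranges(estimates: list[RangeEstimate]) -> tuple[float, float]:
    """Combine independent range estimates by inverse-variance weighting.

    Returns the fused distance and its standard deviation. Sensors with
    smaller assumed noise receive proportionally more weight.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / (e.std_m ** 2) for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


# Illustrative numbers only (assumptions, not taken from any datasheet):
# the camera is given a much noisier range estimate than the radar.
camera = RangeEstimate("camera", distance_m=42.0, std_m=4.0)
radar = RangeEstimate("radar", distance_m=40.0, std_m=0.5)

distance, std = fuse_ranges([camera, radar])
print(f"fused distance: {distance:.2f} m (+/- {std:.2f} m)")
```

With these assumed figures the fused value sits very close to the radar reading, and its uncertainty is slightly smaller than either sensor's alone. Production systems typically go much further, for example tracking objects over time, but the weighting idea is the same.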

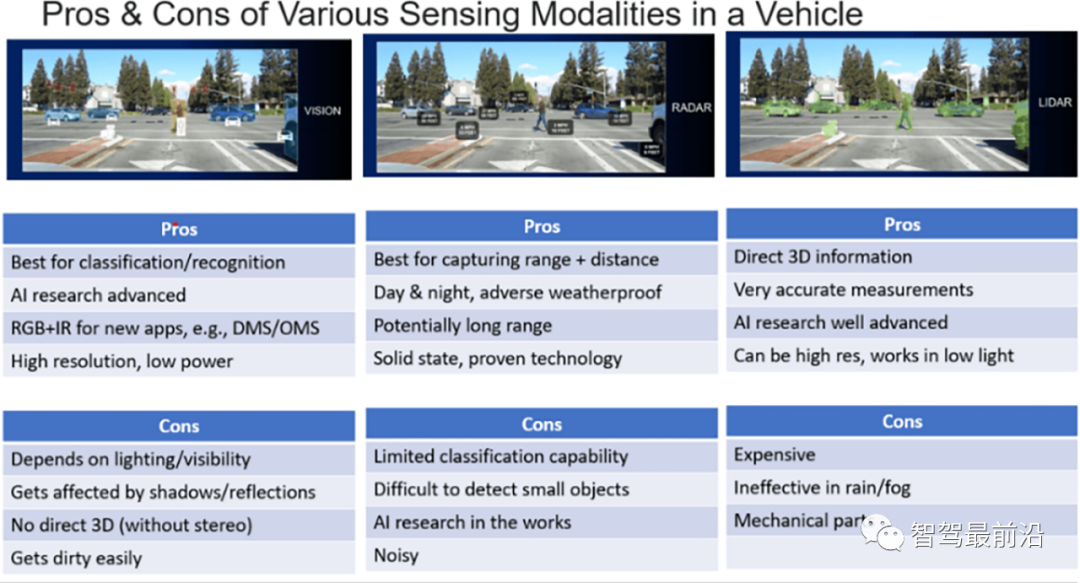

"Each type of sensor has its limitations," Koulton said. "Cameras are very suitable for object recognition, but they provide poor distance information, and image processing requires a lot of computing resources. In contrast, LiDAR and radar provide excellent distance information, but with poor clarity. In addition, LiDAR cannot work well in harsh weather conditions."

How many sensors are needed exactly?

There is no simple answer to the question of how many sensors an autonomous driving system needs, and OEMs are still trying to work it out. Use cases matter as well: the requirements for trucks driving on open roads, for example, are very different from those for urban robot taxis.

"This is a difficult calculation because each automotive OEM has its own architecture for protecting the vehicle by providing better spatial positioning, longer range with high visibility, and the ability to recognize and classify objects and then differentiate between them," said Amit Kumar, Director of Product Management and Marketing at Cadence. "It also depends on the levels of autonomy that a car manufacturer decides to support (for example, to provide breadth). In short, to achieve partial autonomy, the minimum number of sensors can be 4 to 8 of various types. For full autonomy, 12+ sensors are used today."

Kumar pointed out that in Tesla's case there are 20 sensors (8 cameras plus 12 ultrasonic sensors, for Level 3 or below) with no LiDAR or radar. The company believes strongly in computer vision, and its sensor suite is suited to L3 autonomy. According to media reports, Tesla may introduce radar to improve autonomous driving.

Zoox has implemented four LiDAR sensors, along with a combination of cameras and radar sensors. This is a fully autonomous vehicle with no driver inside, designed to operate on well-mapped and well-understood routes. Commercial deployment has not yet begun, but it is expected soon for a limited use case (unlike passenger cars).

For Nuro's autonomous delivery vehicle, aesthetics are less important. It uses a 360-degree camera system with four sensors, along with a 360-degree LiDAR sensor, four radar sensors, and ultrasonic sensors.
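To compare the three configurations side by side, the counts quoted above can be put in a small table. The sketch below only restates figures from the text. Where the text gives no number (Zoox's cameras and radar, Nuro's ultrasonic sensors), the entry is `None` rather than a guess. The dictionary layout and the helper name `known_total` are illustrative choices.

```python
from typing import Optional

# Counts as quoted in the article; None marks a count the article does not give.
SENSOR_SUITES: dict[str, dict[str, Optional[int]]] = {
    "Tesla (per Kumar)": {"camera": 8, "ultrasonic": 12, "lidar": 0, "radar": 0},
    "Zoox": {"lidar": 4, "camera": None, "radar": None},
    "Nuro": {"camera": 4, "lidar": 1, "radar": 4, "ultrasonic": None},
}


def known_total(suite: dict[str, Optional[int]]) -> tuple[int, bool]:
    """Return the sum of the stated counts and whether every count is stated."""
    known = sum(count for count in suite.values() if count is not None)
    complete = all(count is not None for count in suite.values())
    return known, complete


for name, suite in SENSOR_SUITES.items():
    total, complete = known_total(suite)
    qualifier = "" if complete else " or more (some counts not stated)"
    print(f"{name}: {total}{qualifier}")
```

Even this small tally shows how much the suites differ: one relies on cameras and ultrasonic sensors alone, while the other two mix in LiDAR and radar.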

There is no simple formula for implementing these systems.

"The number of sensors required depends on the level of risk the organization finds acceptable, and also on the application," said Chris Clark, Senior Manager of Automotive Software and Security at Synopsys Automotive Group. "If robot taxis are being developed, they need not only sensors for road safety, but also sensors inside the vehicle to monitor what passengers are doing and ensure their safety. In this case, we will be in an area with a large population and high urban density. That area has quite unique characteristics compared with highway driving, where you have longer distances and more room to react. On the highway, there is less chance of something intruding into the roadway. I don't think there is a fixed rule that says you must have, say, three different types of sensors and three different cameras to cover all the angles for every autonomous vehicle."

Ultimately, the number of sensors depends on the use cases the vehicle is meant to address.

"In the example of a robot taxi, it is necessary to use LiDAR and conventional cameras, as well as ultrasonic sensors or radar, because there is so much density to deal with," Clark said. "In addition, we need to include a sensor for V2X, so that the data flowing into the vehicle is consistent with what the vehicle sees in its surroundings. In highway trucking solutions, different types of sensors will be used. Ultrasonic sensors are not as useful on highways unless we are doing something like platooning, and even then they would not be purely forward-looking sensors. Instead, they would potentially be forward- and rear-looking sensors so that we can connect to all of the platoon's assets. However, LiDAR and radar become more important because of the distances and ranges that must be considered when driving on highways."

Another factor to consider is the level of analysis required. "There is so much data to process that we must decide how much of it is important," Clark said. This is where sensor types and capabilities become interesting. For example, if LiDAR sensors can perform local analysis early in the cycle, less data flows back to sensor fusion for further analysis. Reducing the amount of data in turn lowers the overall computing power required and the cost of the system design. Otherwise, vehicles will need additional processing in the form of integrated computing environments or dedicated ECUs focused on sensor grid partitioning and analysis.
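A rough back-of-the-envelope calculation shows why local analysis at the sensor matters. The sketch below compares the bandwidth of a raw LiDAR point cloud with that of a compact list of detected objects. Every figure in it (point rate, bytes per point, objects per frame, bytes per object, frame rate) is a hypothetical placeholder chosen for easy arithmetic, not a specification of any real sensor.

```python
def raw_point_cloud_rate_mbps(points_per_second: int, bytes_per_point: int) -> float:
    """Bandwidth needed to ship every raw point to the fusion computer, in Mbit/s."""
    return points_per_second * bytes_per_point * 8 / 1e6


def object_list_rate_mbps(objects_per_frame: int, bytes_per_object: int,
                          frames_per_second: int) -> float:
    """Bandwidth needed if the sensor sends only detected objects, in Mbit/s."""
    return objects_per_frame * bytes_per_object * frames_per_second * 8 / 1e6


# Hypothetical placeholder values (assumptions for illustration only).
POINTS_PER_SECOND = 1_000_000
BYTES_PER_POINT = 16     # e.g. x, y, z, intensity as 4-byte floats
OBJECTS_PER_FRAME = 50
BYTES_PER_OBJECT = 64    # e.g. class, position, size, velocity
FRAMES_PER_SECOND = 10

raw = raw_point_cloud_rate_mbps(POINTS_PER_SECOND, BYTES_PER_POINT)
objects = object_list_rate_mbps(OBJECTS_PER_FRAME, BYTES_PER_OBJECT, FRAMES_PER_SECOND)

print(f"raw point cloud: {raw:.1f} Mbit/s")
print(f"object list:     {objects:.3f} Mbit/s")
print(f"reduction:       {raw / objects:.0f}x")
```

Under these assumptions, the object list needs only a small fraction of the raw stream's bandwidth. The trade-off is that the sensor must carry its own processing, and the central computer no longer sees the raw data.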

Cost has always been an issue

Sensor fusion can be expensive. In the early days, a LiDAR system consisting of multiple units could cost up to $80,000. The high cost came from the mechanical components in the device. Today the cost is much lower, and some manufacturers predict that at some point in the future it may fall to $200 to $300 per unit. Emerging thermal sensor technology will cost around a few thousand dollars.

Overall, OEMs will continue to face pressure to reduce the cost of sensor deployment. Using more cameras in place of LiDAR systems would help OEMs reduce manufacturing costs.

"The basic definition of safety in urban environments is to eliminate all avoidable collisions," said David Fritz, Vice President of Hybrid and Virtual Systems at Siemens Digital Industries Software. The minimum number of sensors required depends on the use case. Some people believe that smart city infrastructure will eventually become sophisticated and ubiquitous, reducing the need for in-vehicle sensors in urban environments.

Vehicle-to-vehicle communication may also affect the number of sensors needed.

"Here, the number of onboard sensors may decrease, but we are not there yet," Fritz observed. "In addition, there will always be situations where the AV has to assume that not all external information is available because of power failures or other outages. Therefore, some sensors will always need to be installed on the vehicle, not only for urban areas but also for rural areas. Many of the designs we have been working on require eight external cameras and several internal cameras on the vehicle. By properly calibrating the two front cameras, we can achieve low-latency, high-resolution stereo vision that provides the depth range of objects and reduces the need for radar. We do this at the front, rear, and both sides of the vehicle to obtain a complete 360° view."

As all cameras perform object detection and classification, key information will be transmitted to the central computing system to make control decisions.
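The stereo arrangement Fritz describes rests on a standard relationship for a calibrated, rectified camera pair: the depth of a point equals the focal length times the distance between the cameras (the baseline), divided by the disparity, which is the horizontal shift of that point between the two images. The sketch below applies that formula. The focal length and baseline are hypothetical values, not parameters of any vehicle mentioned in this article.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical camera parameters (assumptions for illustration only).
FOCAL_LENGTH_PX = 1400.0  # focal length expressed in pixels
BASELINE_M = 0.30         # distance between the two front cameras in meters

for disparity_px in (42.0, 10.5, 4.2):
    depth = stereo_depth_m(FOCAL_LENGTH_PX, BASELINE_M, disparity_px)
    print(f"disparity {disparity_px:5.1f} px -> depth {depth:6.1f} m")
```

Because depth is inversely proportional to disparity, distant objects shift by only a few pixels, so small matching or calibration errors translate into large range errors at long distance. That is one reason careful calibration and high-resolution cameras matter for this approach.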

"If infrastructure or other vehicle information is available, it will be fused with information from onboard sensors to generate more comprehensive 3D views for better decision-making," Fritz said. "Internally, additional cameras are used for driver monitoring and detecting occupancy conditions such as left behind objects. A low-cost radar may be added to handle harsh weather conditions such as heavy fog or rain, which is an advanced supplement to the sensor kit. We have not seen a significant use of LiDAR recently. In some cases, LiDAR performance can be affected by echoes and reflections. Initially, autonomous driving prototypes heavily relied on GPU processing of LiDAR data, but recently, more intelligent architectures tend to favor high-resolution, high FPS cameras, and their distributed architecture can better optimize the data flow of the entire system."

Optimizing sensor fusion can be complex. How do you know which combination provides the best performance? In addition to functional testing, OEMs rely on modeling and simulation solutions from companies such as Ansys and Siemens to test the results of various sensor combinations.

The impact of enhancement technologies on future sensor design

Enhancement technologies such as V2X, 5G, advanced digital maps, and GPS in intelligent infrastructure will make autonomous driving possible with fewer in-vehicle sensors. But for these technologies to mature, autonomous driving will need the support of the entire automotive industry and the development of smart cities.

"Various enhancement technologies serve different purposes," said Frank Schirrmeister, Vice President of IP Solutions and Business Development at Arteris. "Developers often combine several of them to create a safe and convenient user experience. For example, digital twins of map information used for path planning can supplement sensor-based local decision-making in the car and create a safer experience when visibility is limited. V2V and V2X information can supplement the information available locally in the car to make safety decisions, adding redundancy and creating more data points on which to base those decisions."

In addition, the Internet of Vehicles opens the way to real-time collaboration between vehicles and roadside infrastructure, which requires technologies such as Ultra-Reliable Low-Latency Communication (URLLC).

"These requirements have led to the application of various artificial intelligence technologies in traffic prediction, 5G resource allocation, congestion control, and other areas," Kouthon said. "In other words, AI can optimize and reduce the heavy load that autonomous driving places on network infrastructure. We expect OEMs to build autonomous vehicles on software-defined vehicle architectures, in which ECUs are virtualized and updated over the air. Digital twin technology is crucial for testing software and updates on a cloud simulation that closely matches the real vehicle."

Conclusion

In final implementations, L3 autonomous driving may require 30+ sensors or only a dozen cameras, depending on the OEM's architecture. However, there is currently no consensus on which approach is safer, or on whether autonomous driving sensor systems will provide the same level of safety in urban environments as on highways.

As sensor costs fall in the coming years, the door may open to new sensors that can be added to the mix to improve safety in adverse weather. However, it may take OEMs a long time to standardize on a number of sensors considered sufficient to ensure safety under all conditions and in extreme situations.

Source: The forefront of intelligent driving