Zhang Dingyong, Senior Director of NIO's Cockpit Delivery Department, believes that the value and applications of AR fall into two categories. The first is efficiency tools: by providing additional digital information, AR helps users better perceive the real environment and make decisions. Examples include AR-HUD, AR extended screens, AR object recognition, and AR education. In this category virtual objects must be integrated with real space, as on HoloLens, Magic Leap, and Vision Pro. The second is content entertainment and immersive viewing, such as NIO PanoCinema, where virtual objects do not need to blend with real space.

Zhang Dingyong | Senior Director, Cockpit Delivery Department I, NIO

He also stated that AR technology has developed along three directions: head-mounted displays, video see-through (VST), and optical see-through (OST). The development trend of AR technology is threefold: first, devices are gradually approaching the form of ordinary glasses; second, the move is toward optical see-through and AR display hardware with a larger field of view; third, for in-car AR, as many components as possible should be moved off the glasses and into the vehicle.

The following is a summary of the speech content:

01 AR Automotive Applications and Technologies

The value and applications of AR fall into two main categories. The first is efficiency tools, which help users better perceive the real environment and make decisions by providing additional digital information. Typical application scenarios include AR-HUD, AR extended screens, AR object recognition, and AR education, all of which fuse virtual objects with real space (as on HoloLens, Magic Leap, and Vision Pro). The second is content entertainment, including immersive viewing such as NIO PanoCinema, where virtual objects do not need to blend with real space.

In-car AR scenarios include immersive viewing, AR extended screens, AR-HUD, AR navigation, AR remote rescue, and AR vehicle prototype design, each of which applies AR within the vehicle domain. The AR extended screen expands the interaction space beyond the physical screens inside the car.

The development of AR technology can be divided into three stages. The first is the head-mounted display, which places a screen directly in front of the eyes wherever the user looks; the first-generation product was Google Glass. The second is video see-through (VST), which relies mainly on two-dimensional image computation. Typical products are HTC Vive and Quest Pro. Quest Pro overlays computer-generated images of virtual objects onto the real-time video stream of the real scene captured by its cameras. The software algorithms are relatively mature, mainly traditional computer-vision (CV) image processing: after a plane is detected, the virtual object is transformed and overlaid onto it. The third is optical see-through (OST), which involves spatial computing. Typical products include HoloLens and Magic Leap.

02 Optical See-Through (OST)

Before discussing optical see-through (OST), let's first look at some background knowledge.

The spatial computing behind OST relies on the principle of binocular (stereo) ranging. Humans perceive distance with two eyes because there is parallax between them. Consider three points at different distances that lie along the same line of sight of the right eye: they appear at the same position to the right eye but at different positions to the left eye. Simulating this with two cameras shows the three points projecting to different positions on the image plane, and the object's distance can be computed from this difference.

Camera L and camera R below form two similar triangles with the observed point. f is the focal length, b is the baseline (the distance between the left and right cameras, analogous to the distance between the pupils), and xl and xr are the positions at which the point is imaged on the left and right image planes. The distance follows from the similar-triangle equations. The difficulty of stereo vision lies in determining which points in the left and right images correspond to the same physical point. Another approach is structured-light ranging, which uses the same principle as binocular ranging: a known pattern is emitted from the position of one of the two "eyes", and the camera at the other position computes the distance from where the pattern lands on its image plane. The result is relatively accurate.
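The similar-triangle relation can be sketched in a few lines of Python. This is a minimal illustration, assuming two parallel, rectified cameras with xl and xr measured in pixels from each image centre, which gives Z = f * b / (xl - xr). The function name and all numeric values are hypothetical and are not taken from the talk.

```python
def depth_from_disparity(f_px, baseline_m, xl_px, xr_px):
    """Depth of a point seen by two parallel, rectified cameras.

    From the similar triangles: Z = f * b / (xl - xr).
    """
    disparity = xl_px - xr_px
    if disparity <= 0:
        raise ValueError("expected xl > xr for a point in front of both cameras")
    return f_px * baseline_m / disparity


# Illustrative values only (assumptions, not from the talk).
F_PX = 800.0        # focal length in pixels
BASELINE_M = 0.064  # spacing between the two cameras, in metres

# Three points that share one right-image position but differ in the left image.
for xl, xr in [(420.0, 400.0), (410.0, 400.0), (405.0, 400.0)]:
    z = depth_from_disparity(F_PX, BASELINE_M, xl, xr)
    print(f"xl={xl} xr={xr} -> depth {z:.2f} m")
```

Halving the disparity doubles the computed depth, which is why distant points, with their small disparities, are the hardest to range accurately with a fixed baseline.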

The first step in AR OST is calibration, which mainly establishes two transformations: the mapping from camera image coordinates to the physical world, and the relative poses among the camera, the human eye, and the display.

The second step is SLAM (simultaneous localization and mapping). Mapping comes first, and ranging is the most crucial part of spatial computing. Depth can be computed with binocular cameras, structured-light cameras, LiDAR, or infrared lasers, yielding depth maps of the three-dimensional scene. As the device moves, its relative displacement is obtained with the help of the onboard IMU, and the depth maps are continuously corrected using the accumulated displacement. Finally, the environment is scanned and the 3D scene is reconstructed with coordinates in the world coordinate system.
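The geometric core of the mapping step, turning a depth measurement into a point in the world coordinate system, can be sketched as follows. This is a simplified illustration assuming a pinhole camera model and an already known camera pose. A real SLAM system also estimates that pose and filters noise, which is not shown here. The function names, intrinsics, and pose values are hypothetical.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Pixel (u, v) with a measured depth -> 3D point in the camera frame."""
    return ((u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m)


def camera_to_world(p_cam, rotation, translation):
    """Apply the camera pose: p_world = R * p_cam + t."""
    return tuple(
        sum(rotation[i][j] * p_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical intrinsics and pose, for illustration only.
FX = FY = 500.0
CX, CY = 320.0, 240.0
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
camera_position = (0.1, 0.0, 0.0)  # e.g. displacement accumulated since mapping began

p_cam = backproject(420.0, 240.0, 2.0, FX, FY, CX, CY)
p_world = camera_to_world(p_cam, IDENTITY, camera_position)
print("camera frame:", p_cam)
print("world frame: ", p_world)
```

Repeating this for every depth pixel while the pose is updated from the device's motion yields the point cloud from which the 3D scene is reconstructed.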

The next step is SLAM localization. Once the coordinates of the physical environment in the world coordinate system are known and the feature points of the environment (such as lights on a wall) have been captured, their absolute physical coordinates can be stored on the device and used to localize it.

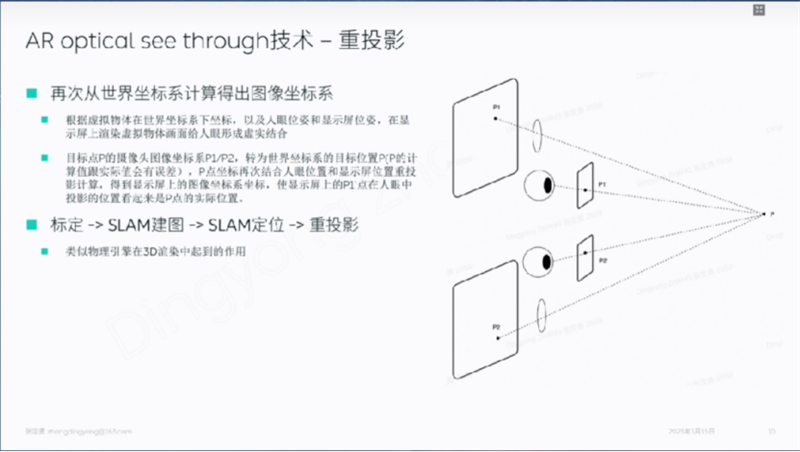

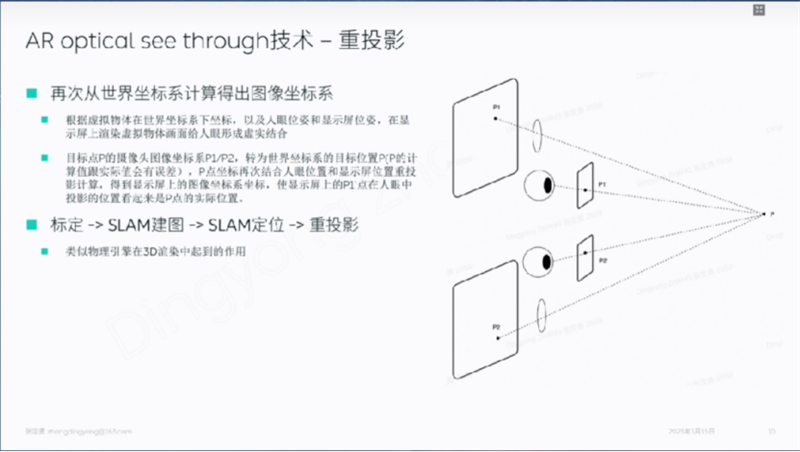

Image source: Guest speech materials

The final step of OST is reprojection. Reprojection is needed because virtual objects must be overlaid onto real space, fusing them with the physical world as it is actually observed. VST has many mature techniques for this, such as feature detection. The complex process described above is needed because it makes the result more realistic, similar to the role a physics engine plays in 3D rendering.
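As a rough illustration of reprojection, the sketch below takes a virtual object anchored at a fixed world position and computes where it must be drawn for two different viewer positions. It assumes a simple pinhole model for the eye and display combination. The function names, intrinsics, and poses are hypothetical, and a real OST pipeline would use the calibrated eye-to-display transform instead.

```python
def world_to_view(p_world, rotation, translation):
    """Invert a rigid pose (R, t): p_view = R^T * (p_world - t)."""
    d = [p_world[i] - translation[i] for i in range(3)]
    return tuple(sum(rotation[j][i] * d[j] for j in range(3)) for i in range(3))


def project(p_view, fx, fy, cx, cy):
    """Pinhole projection of a viewer-frame point to display pixels."""
    x, y, z = p_view
    if z <= 0:
        raise ValueError("point is behind the viewer")
    return (fx * x / z + cx, fy * y / z + cy)


# Hypothetical values, for illustration only.
FX = FY = 500.0
CX, CY = 320.0, 240.0
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
anchor_world = (0.5, 0.0, 2.0)  # where the virtual object is pinned in the real scene

# The viewer moves 10 cm to the right between the two frames.
for viewer_position in [(0.1, 0.0, 0.0), (0.2, 0.0, 0.0)]:
    p_view = world_to_view(anchor_world, IDENTITY, viewer_position)
    u, v = project(p_view, FX, FY, CX, CY)
    print(f"viewer at {viewer_position} -> draw at pixel ({u:.1f}, {v:.1f})")
```

The drawn position shifts as the viewer moves. That shift is what keeps the virtual object visually fixed in real space.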

03 AR Technology Breakthroughs

The areas where AR technology needs breakthroughs are weight, dizziness, price, and development direction.

The first is weight, which comes from the battery, cameras, and heat dissipation. The battery accounts for a considerable share of the weight, so moving it to an external pack is a clever approach. Cameras require a processing chip, which adds weight, and the chip in turn requires a heat sink, which adds more. In addition, both MR and VR headsets need a fully enclosed housing, which adds further weight.

The second is dizziness, whose causes include focus mismatch, image jitter and latency, and errors in OST virtual-real registration. Besides binocular parallax, the eye's focusing distance is another cue for judging distance when we look at real objects, though a secondary one. Existing VR and AR devices cannot reproduce this cue because the focal distance is fixed at the display, so prolonged viewing leads to a focus mismatch known as the vergence-accommodation conflict. In addition, generating and rendering virtual objects currently takes time in both AR and VR, and in VST both the real and the virtual imagery pass through the camera pipeline, so the whole view is delayed, which can cause dizziness and discomfort when moving. Some products have now reduced this delay to 10 to 12 milliseconds. In OST, the registration error between virtual and real content is an important factor, and reducing it remains a challenge.
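A back-of-the-envelope calculation shows why latency matters during head movement: while a frame is being produced the head keeps turning, so the image trails the true view by roughly the head's angular speed multiplied by the latency. The sketch below assumes a head speed of 100 degrees per second, which is an illustrative figure and not one from the talk. The 50 ms case is included only for comparison.

```python
def angular_lag_deg(head_speed_deg_per_s, latency_ms):
    """Angle the head turns during the latency, i.e. how far the image trails."""
    return head_speed_deg_per_s * latency_ms / 1000.0


HEAD_SPEED_DEG_PER_S = 100.0  # assumed head rotation speed, for illustration

for latency_ms in (10.0, 12.0, 50.0):
    lag = angular_lag_deg(HEAD_SPEED_DEG_PER_S, latency_ms)
    print(f"{latency_ms:.0f} ms latency -> about {lag:.1f} degrees of lag")
```

Under this assumption, a delay of 10 to 12 milliseconds keeps the lag to about one degree.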

The third is price. Because of the number of cameras and computing chips involved, current prices are relatively high. In the long run this is not a problem, because hardware prices keep falling. For in-vehicle virtual extended screens, however, a lower-cost solution is required, given the particular characteristics of the vehicle scenario.

On development direction, the key is to gradually approach the form of ordinary glasses so that the device can be worn for long periods and truly change daily life. In real life, interacting with other people is unavoidable, and repeatedly taking a headset off and putting it back on is not realistic. Second, optical see-through and AR display hardware need a larger field of view: current AR displays are limited to around 70 degrees, while VR can usually exceed 100 degrees, and this limits the adoption of OST. In the automotive direction, as many components as possible should be moved into the vehicle to reduce the weight of the glasses. Because the environment is different, in-car scenarios have unique advantages.

One in-car application is the extended screen: a virtual screen is attached beside the physical screen. The key is a stable fit, so that the virtual screen stays anchored next to the physical screen no matter how the viewer's head and eyes move. Because the car interior is a rigid body, a simple method is to place several infrared laser points inside the cabin. With a monocular camera, the camera's current position can be computed from the known positions of three points and their projections in the current camera image. The virtual screen can then be projected and attached to the physical space inside the car at very low computational cost. This is the advantage of the in-car setting.

Vision Pro is a very powerful product and a great one for the industry. Its biggest innovation is a new interaction paradigm. Eye tracking and gesture recognition have existed for a long time, but Vision Pro combines the two into a new way of interacting that is very natural and has no learning cost.

At the display level, Vision Pro uses VST rather than OST. At the sensor and computing levels, however, it has the spatial computing capability that OST requires, which is why it is currently difficult to reduce its price.

Regarding depth sensing, LiDAR is suitable for long-range measurement: its accuracy is not greatly affected even at long distances. The TrueDepth camera has higher resolution and is suitable for high accuracy at close range. Apple chose to combine the two to cover both close-range and long-range measurement for SLAM mapping.

In terms of display hardware, the weight and thickness of pancake modules will decrease. Vision Pro has made up for OST's shortcomings in resolution and latency. The reason for not choosing OST may be that the device needs to support both AR and VR modes in order to cover more application scenarios. Current OST display technology has a limited field of view and cannot support VR scenes well. Equipping the hardware with OST-level capability first, and thereby providing mature technical support for AR and VR applications, is a very clever approach.

04 Future Development of AR and VR

Image source: Guest speech materials

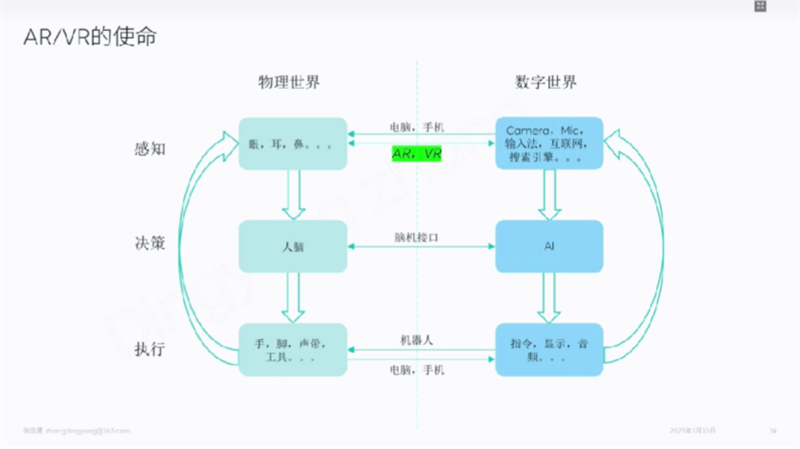

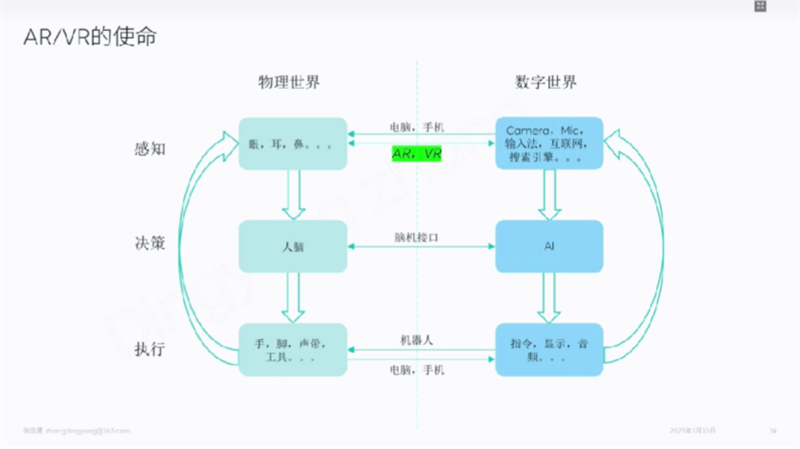

Human beings have existed for hundreds of thousands of years, and their development has always taken place in the physical world, the small C-shaped loop on the left of the figure. The digital world took shape only in recent decades, and it too comprises perception, decision-making, and execution, although AI is still at a relatively early stage. At present, humans perceive both the digital world and the physical world, make decisions with human intelligence, and finally execute in both worlds, which forms one large C-shaped cycle.

Therefore, more connections between the digital world and the physical world are now needed, mainly at the perception layer and the execution layer. At the perception layer, the connection currently runs through computers and mobile phones, which carry information into the digital world. The digitization of the physical world has already been partially achieved: most transactions are now completed in the digital world, and texts and books have been digitized, but the digitized share is still small. Computers and mobile phones currently serve as the bridging interfaces. At the execution layer, the connection also runs through computers and mobile phones: after the human brain settles on an intention, it is entered into a computer, which carries out more of the work in the digital world.

With the development of AI, there will be more interfaces between the two worlds. The digital world can bring significant productivity gains, which is a fundamental driving force. New interfaces may emerge at different layers, including decision-making and execution: the decision-making layer may gain new interfaces, and the execution layer may use robots to map the digital world back into the physical world.

It is uncertain whether the two worlds will eventually form a figure-8 cycle or become a small D-shaped cycle on the right. What is certain is that the physical world is a three-dimensional space, while the future digital world will be more than three-dimensional: because it can be traced back through time, it is a world of at least four dimensions.

The connection between the 3D world and this higher-dimensional world is still a bottleneck, because today's computers and phones still have 2D interfaces. It is uncertain whether AR and VR will replace computers and mobile phones, but a new form will definitely emerge. AR and VR can not only project information from the digital world into the physical world; conversely, they can also turn information from the physical world into digital assets through methods such as image capture and ranging.

(The above content is from the keynote speech "AR Technology Application Trends", delivered by Zhang Dingyong, Senior Director of NIO's Cockpit Delivery Department, at the 2023 Intelligent Cockpit Vehicle Display and Perception Conference, held July 11-12, 2023.)

Note: This article (including images) is a reprint, and the copyright of the article belongs to the original author. If there is any infringement, please contact us to delete it.